The secret language of behavior

Speaking in San Diego at last November’s Society for Neuroscience meeting, Sandeep Robert (Bob) Datta described how his computational analysis of the behavior of mice reveals a modular structure. It is currently the only published study of this kind in mammals. He emphasized the unsupervised nature of the machine-learning approach he applied to the 3-D pose dynamics of freely moving animals. A structured pattern on the subsecond timescale emerged from the data themselves. These data were initially derived from videos of mice placed individually in buckets, but the same temporal patterning was detected in other environments as well.

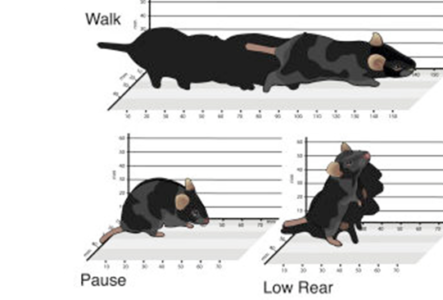

Mathematical modeling of the data identified a repertoire of about 60 syllables, each with a characteristic trajectory in pose space lasting 300 milliseconds on average, and separated by dynamic transitions. Changing the environment, for example by putting fox odor at one spot in the arena, provoked aversive behavior composed of the same modules, but used with different frequencies and transition probabilities.
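The two quantities that shift under fox odor are syllable usage (how often each module occurs) and transition probabilities (how likely one module is to follow another). The minimal Python sketch below computes both. It assumes behavior has already been segmented into a sequence of integer syllable labels. The toy sequences and the helper `usage_and_transitions` are hypothetical illustrations. This is not the Datta lab's model, which infers the syllables themselves from 3-D pose data.

```python
import numpy as np

def usage_and_transitions(labels, n_syllables):
    """Hypothetical helper: summarize one sequence of syllable labels.

    usage[i]          -- fraction of syllable instances that are syllable i
    transitions[i, j] -- estimated P(next syllable is j | current syllable is i)
    """
    labels = np.asarray(labels)
    # Usage: count occurrences of each syllable, normalized to sum to 1.
    counts = np.bincount(labels, minlength=n_syllables)
    usage = counts / counts.sum()

    # Count transitions between consecutive syllables (duplicates accumulate).
    trans = np.zeros((n_syllables, n_syllables))
    np.add.at(trans, (labels[:-1], labels[1:]), 1)

    # Row-normalize counts into probabilities; rows with no outgoing
    # transitions stay all-zero instead of dividing by zero.
    row_sums = trans.sum(axis=1, keepdims=True)
    trans = np.divide(trans, row_sums, out=np.zeros_like(trans), where=row_sums > 0)
    return usage, trans

# Invented toy sequences using 3 syllables (the real repertoire has about 60).
baseline = [0, 1, 2, 0, 1, 2, 0, 1, 2, 0]
fox_odor = [0, 2, 2, 2, 0, 2, 2, 1, 2, 2]

for name, seq in [("baseline", baseline), ("fox odor", fox_odor)]:
    usage, trans = usage_and_transitions(seq, n_syllables=3)
    print(name, "usage:", np.round(usage, 2))
    print(np.round(trans, 2))
```

Both toy conditions draw on the same three syllables. Only their usage and the probabilities of moving between them differ, which is the kind of change the fox-odor experiment revealed.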

The study, described in a video from the Datta lab, has now been extended in unpublished work. This work shows that dissecting behavior in this way provides a sensitive read-out of the effects of drugs and disease mutations.

In one experiment, each of 500 mice was given one of 15 commonly used psychoactive drugs. Datta’s model finds that each drug leaves a distinct behavioral fingerprint, diagnostic of the particular drug an individual mouse had received.
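A nearest-centroid classifier is one simple way to see how syllable usage could act as a diagnostic fingerprint. It averages the usage vectors of mice known to have received each drug, then assigns a new mouse to the drug whose average profile is closest. The Python sketch below illustrates only that idea. The drug names, usage numbers and the `fit_centroids`/`classify` helpers are hypothetical. This is not the classifier used in Datta’s analysis.

```python
import numpy as np

def fit_centroids(usages, drugs):
    """Hypothetical helper: average the syllable-usage vectors per drug."""
    usages = np.asarray(usages, dtype=float)
    drugs = np.asarray(drugs)
    names = sorted(set(drugs.tolist()))
    # One centroid (mean usage profile) per drug, in the order of `names`.
    centroids = np.stack([usages[drugs == d].mean(axis=0) for d in names])
    return names, centroids

def classify(usage, names, centroids):
    """Assign a mouse to the drug whose mean usage profile is nearest (Euclidean)."""
    distances = np.linalg.norm(centroids - np.asarray(usage, dtype=float), axis=1)
    return names[int(np.argmin(distances))]

# Invented training data: usage of 4 syllables per mouse (each row sums to 1).
train_usages = [
    [0.40, 0.30, 0.20, 0.10],  # drug_A
    [0.42, 0.28, 0.20, 0.10],  # drug_A
    [0.10, 0.20, 0.30, 0.40],  # drug_B
    [0.12, 0.18, 0.30, 0.40],  # drug_B
]
train_drugs = ["drug_A", "drug_A", "drug_B", "drug_B"]

names, centroids = fit_centroids(train_usages, train_drugs)
print(classify([0.38, 0.32, 0.20, 0.10], names, centroids))  # prints drug_A
```

In practice a classifier like this would be judged on mice held out from training. "Diagnostic" then means correctly predicting the drug for animals the model has not seen.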

Datta also phenotyped two genetic mouse models of autism, CNTNAP2-/- and 16p11.2. Both were recently reported as hyperactive in a battery of traditional behavioral assays. His analysis reveals clear differences between the behavioral alterations shown by the two mutant strains. Each mutant differs from wild-type mice in its usage of 8 specific syllables. Apart from a single overlap, however, the affected syllables are distinct for the two genetic models.

The analysis also provided a disheartening insight into the effects of the drug risperidone. Risperidone has shown efficacy against the CNTNAP2 phenotype in mice, and it is FDA-approved for treating irritability associated with autism (as well as schizophrenia and the manic phase of bipolar disorder). Of the 8 syllables affected by CNTNAP2 deficiency, only one is similarly affected by risperidone. The drug also reduces the usage of other, normal syllables. The results imply that, at least in mice, the drug acts as a sedative rather than correcting circuit defects caused by the mutation.

With a spin-off company (Syllable Life Sciences), efforts to exploit this new ability to decipher the body language of mice for therapeutic benefit are already underway. For researchers, Datta’s model is freely available, an announcement greeted with spontaneous applause by symposium attendees.

Linking behavior to neural circuits

There were many other presentations involving computational ethology at the meeting. Several illustrated how automated tracking can be combined with modern tools for monitoring and manipulating neural circuits to gain insight into how those circuits function.

As a postdoc in Joshua Shaevitz’s lab at Princeton, and in collaboration with Bill Bialek, Gordon Berman published earlier work using unsupervised machine learning to probe the ground-based social behavior of fruit flies. This work identified 117 stereotyped motifs, each lasting 0.21 seconds on average and linked by transitions lasting 0.13 seconds on average.

Mathematical modeling of these data indicates a hierarchical organization over different timescales, consistent with current models of how neural circuitry is organized in Drosophila. Berman is now tackling the challenge of deciphering the neural command code for these behaviors at the point where it is compressed into the descending axons in the neck. These axons are 100-fold fewer than the neurons in either the brain or the rest of the body. Together with Joshua Shaevitz, Jessica Cande, Gwyneth Card and David Stern, he is analyzing the behavioral effects of optogenetically stimulating these axons.

Thanks to Janelia Farm’s mission to develop and share tools for the research community, there are 2,215 GAL4 Drosophila lines that can drive the cell-type specific expression of genetic constructs, such as the light- or heat-activatable channels used in opto- and thermogenetic experiments.

A neat example of applying these technologies was given by Brian Duistermars, who has tracked and analyzed the threat displays of male fruit flies, pinning the control of this behavior down to six neurons capable of directing all or part of it according to the intensity with which they are stimulated.

Kristin Branson described a broader, more systematic screen for behaviors altered by thermogenetic activation of 2,200 GAL4 lines. Her group has developed JAABA (the Janelia Automatic Animal Behavior Annotator), an interactive machine-learning system in which biologists supervise the analysis of animal behavior. The group used it to classify 20 different ground-based social behaviors in Drosophila, so that changes in each behavior can be detected computationally.

This has enabled the generation of brain-behavior maps that link high-dimensional behavioral data to the manipulation of specific neurons throughout the nervous system. The results are not yet published, but the project features in a video from the lab. True to the Janelia tradition, the group is working on ways of sharing the data through a browsable atlas of behavior-anatomy maps.

Zebrafish are another example of a model organism yielding new insights through computational ethology.

Gonzalo de Polavieja studies decision making by zebrafish in a social context, using his published idTracker software, which reliably identifies individual fish as they interact. The fish engage in non-random social interactions from 5 days post-fertilization and develop their social behavior while still transparent larvae. This makes them a promising model in which to look for neural correlates of behavior and to test the effects of genetic mutations and drugs.

But mice remain the dominant model for biomedical research relevant to human disease.

Gordon Berman applies his unsupervised machine learning and modeling approach to the behavior of mice and humans as well as Drosophila, and he makes his models available on GitHub. Preliminary results on the behavior of mice in both social and nonsocial contexts, obtained in collaboration with RC Liu’s group, were presented in a poster at the meeting.

Megan Carey has developed LocoMouse to analyze the limb, head, and tail kinematics of freely walking mice and investigate the effects of perturbing cerebellar circuitry.

Eiman Azim uses unsupervised learning methods from machine vision to detect and reliably quantify subtle variations in reaching and grasping. He is building on his published work to understand how cerebellar circuits interact with motor commands to fine-tune these goal-directed movements.

Adam Hantman applies the interactive machine-learning approach developed by Branson to analyze the role of the motor cortex in controlling reaching and grasping in head-fixed mice. His published work demonstrates an impressive “pause and play” level of control over the behavior using optogenetics. He is now looking to correlate the kinematics of the behavior with activity in the motor cortex and subcortical areas. This is made possible by a two-photon mesoscope with a large (5 mm) field of view, recently developed by Sofroniew et al. (2016), which achieves simultaneous calcium imaging of different brain areas with single-neuron resolution.

Overall, the message is that ethology is on course to catch up and connect with advances in monitoring and manipulating neural circuit activity. With the aid of sophisticated computational analyses, we are beginning to understand the language of behavior, the ultimate output of the brain.

- The secret language of behavior - 31st January 2017