Brain-computer interfaces (BCI) are devices that measure signals from the brain and translate them into executable output with the help of a machine such as a computer or prosthesis. This technology has varied uses, from assistive devices for disabled individuals to advanced video game control.

BCI has been discussed in the media and portrayed in science fiction television and film. Sometimes the technology is portrayed positively, as with the prosthetics in RoboCop. Other portrayals warn about how far it could go, such as the literal plug-in brain interface in The Matrix, or the game in the Black Mirror episode “Playtest” that brings a player’s worst fears to life. Though these examples are very futuristic, some companies have research goals that could be straight out of a science fiction movie: for example, Elon Musk’s company Neuralink plans to create brain implants that improve memory. The current reality of BCI, however, is more limited than these portrayals suggest.

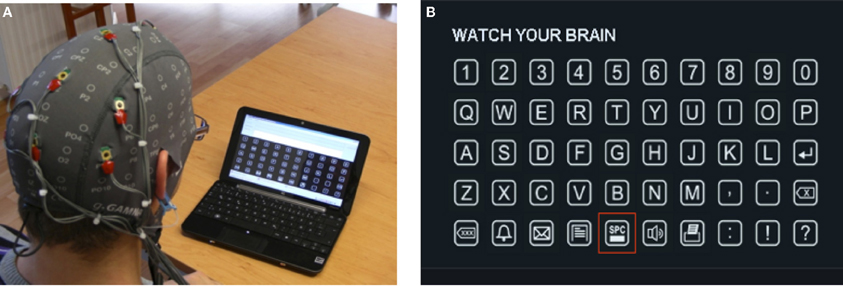

Currently, the main use of BCI pursued by researchers is as assistive technology for individuals with a debilitating loss of motor control, such as that caused by amyotrophic lateral sclerosis (ALS) or spinal cord injury. Patients with late-stage ALS often enter a “locked-in” state in which they lose nearly all motor function, including the ability to speak. To help these individuals, researchers have developed BCI spelling systems based on electroencephalography (EEG). These devices record brain signals through electrodes on the surface of the scalp, and users type messages by focusing their attention on particular letters on a screen.
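
The post does not say how a speller works out which letter the user is focusing on. One common design, the row/column (P300) speller, flashes the rows and columns of a letter grid and looks for an evoked brain response whenever the attended letter flashes. The sketch below illustrates only the decoding step under loud assumptions: the 6x6 grid, the function names, and the effect size are hypothetical, and simulated numbers stand in for real EEG features.

```python
import random
from statistics import mean

# Hypothetical 6x6 grid of symbols (assumption; real spellers use various layouts).
SYMBOLS = "ABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789"
GRID = [SYMBOLS[r * 6:(r + 1) * 6] for r in range(6)]


def decode_letter(row_scores, col_scores):
    """Return the symbol at the row and column with the highest mean score.

    row_scores[i] / col_scores[j] hold one score per flash of row i / column j.
    Averaging over repeated flashes matters because single EEG trials are noisy.
    """
    best_row = max(range(6), key=lambda i: mean(row_scores[i]))
    best_col = max(range(6), key=lambda j: mean(col_scores[j]))
    return GRID[best_row][best_col]


def simulate_scores(target, n_flashes, rng, effect=1.0):
    """Simulated (not real EEG) scores: Gaussian noise plus a boost for the attended line."""
    return [
        [rng.gauss(0.0, 1.0) + (effect if i == target else 0.0) for _ in range(n_flashes)]
        for i in range(6)
    ]


if __name__ == "__main__":
    rng = random.Random(0)
    # The user attends to "P", which sits in row 2, column 3 of GRID.
    rows = simulate_scores(target=2, n_flashes=30, rng=rng, effect=2.0)
    cols = simulate_scores(target=3, n_flashes=30, rng=rng, effect=2.0)
    print(decode_letter(rows, cols))
```

The key design choice is averaging over many flashes: with fewer repetitions or a weaker response, noise more often outweighs the signal and the wrong letter is chosen, which hints at why such spellers can be slow and error-prone.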

Another example of assistive technology is the BCI-controlled motor prosthesis, such as the robotic exoskeleton built by Dr. Miguel Nicolelis and his colleagues, which allowed a paralysed man to kick a soccer ball at the opening of the 2014 World Cup in Brazil.

Ethical questions

The unique, direct connection that BCI creates between our brains and computers raises important ethical questions. We currently interact with computers through our peripheral nervous system: we use our fingers to type an email on a laptop, or our vocal muscles to produce speech for voice recognition systems. In contrast, BCI captures signals directly from our central nervous system, namely the brain.

This direct connection has ethical implications ranging from questions of privacy to the loss of humanity. One example is the ascription of responsibility for the output of a BCI. Perhaps we have less control over our thoughts than over our actions: many of us have thought something yet refrained from saying it aloud. If a BCI device detects such a thought and executes a harmful action that the user would not have taken unaided, can we say that the BCI user is fully responsible?

Another ethical question concerns the potentially misleading role the media play in shaping perceptions of BCI. Current BCI technology is not very reliable: for reasons that remain unclear, fully locked-in patients often cannot control spelling devices. In general, motor-based assistive systems are far more effective than BCI for individuals who retain any motor function. One example is Stephen Hawking’s communication device, which he controlled with minute facial muscle movements and preferred over BCI systems.

Media coverage of BCI, however, tends to be overly positive and futuristic, with phrases such as “mind reading” and “cure” appearing in articles. This misrepresentation can create an expectation gap, in which patients expect a BCI device to be more effective or easier to use than it actually is. Disappointment stemming from overly high expectations could be associated with depression in patients.

Many researchers see great potential in BCI devices, both for individuals living with severe disabilities and for the future of entertainment. However, the ethics literature indicates that these benefits could be accompanied by moral and societal challenges. It is therefore important that neuroscientists, legislators, ethicists, and the general public discuss the impact this technology could have on legal and moral responsibility, informed consent, and other ethical issues. In our paper, we review these issues and call for greater public discourse.

Acknowledgements: The writing of this blog post was supported by a joint grant from the Canadian Institutes of Health Research and the Fonds de recherche du Québec – Santé (European Research Projects on Ethical, Legal, and Social Aspects (ELSA) of Neurosciences) as well as a career award from the Fonds de recherche du Québec – Santé.