Artificial intelligence (AI) technologies have been widely used throughout the world since AlphaGo defeated Go world champion Lee Sedol in 2016. Automated driving, intelligent robots, machine vision, face recognition and other similar tools all rely on AI. With the continual development of medical equipment, AI technologies have now also begun to be applied to medical research.

Burns can be life-threatening and are associated with high morbidity and mortality rates. Reliable diagnosis, supported by accurate assessment of burn area and wound depth, is critical to treatment success. Traditional diagnostic methods, such as the formula method and the ellipse estimation method, can introduce large errors and therefore cannot guarantee accurate treatment.

In recent years, computer-aided tools such as digital cameras and three-dimensional scanning devices have been applied to burn diagnosis. However, these devices are complicated and often unsuited to clinical use. To address this, researchers on our team developed a new method that uses AI technologies to segment burn wounds automatically. The method can be implemented on a mobile phone and, more importantly, it avoids complicated operations during burn diagnosis. The research has been published in Burns & Trauma under the title "Burn image segmentation based on Mask Regions with Convolutional Neural Network deep learning framework: more accurate and more convenient". You are welcome to download and read it.

Burn Image Dataset

Deep learning models generally perform better when they are trained on more data. With this in mind, the researchers teamed up with Tongren Hospital of Wuhan University to collect a large set of burn images.

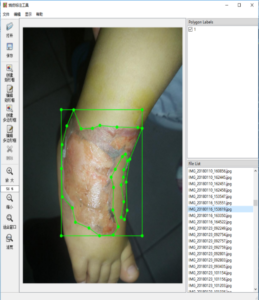

Fig. 1. The process of generating the burn wound training dataset.

As shown in Fig. 1, the researchers gathered the images into a burn dataset. With the help of professional doctors, they then used an annotation tool to annotate the burn wounds in each image.
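
The article does not name the annotation tool or its output format. Many segmentation annotation tools store each wound outline as a polygon of (x, y) vertices, which must then be rasterized into a binary mask before training. The sketch below assumes that polygon format and is not the authors' pipeline; the function names `point_in_polygon` and `polygon_to_mask` are hypothetical. It tests each pixel center with the even-odd (ray-casting) rule.

```python
def point_in_polygon(x, y, polygon):
    """Even-odd (ray-casting) test: True if point (x, y) lies inside the polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]  # wrap around to close the polygon
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            # x coordinate where this edge meets the horizontal line through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:  # the ray cast toward +x crosses this edge
                inside = not inside
    return inside


def polygon_to_mask(polygon, height, width):
    """Rasterize a wound polygon into a height x width binary mask (1 = wound).

    Each pixel is tested at its center, (col + 0.5, row + 0.5).
    """
    return [
        [1 if point_in_polygon(col + 0.5, row + 0.5, polygon) else 0
         for col in range(width)]
        for row in range(height)
    ]


# Usage: a square outline with corners at (1, 1) and (4, 4) on a 6 x 6 image.
mask = polygon_to_mask([(1, 1), (4, 1), (4, 4), (1, 4)], height=6, width=6)
for row in mask:
    print(row)
```

Testing pixel centers rather than corners keeps a pixel from being counted as wound merely because the outline touches its edge.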

Segmentation Framework

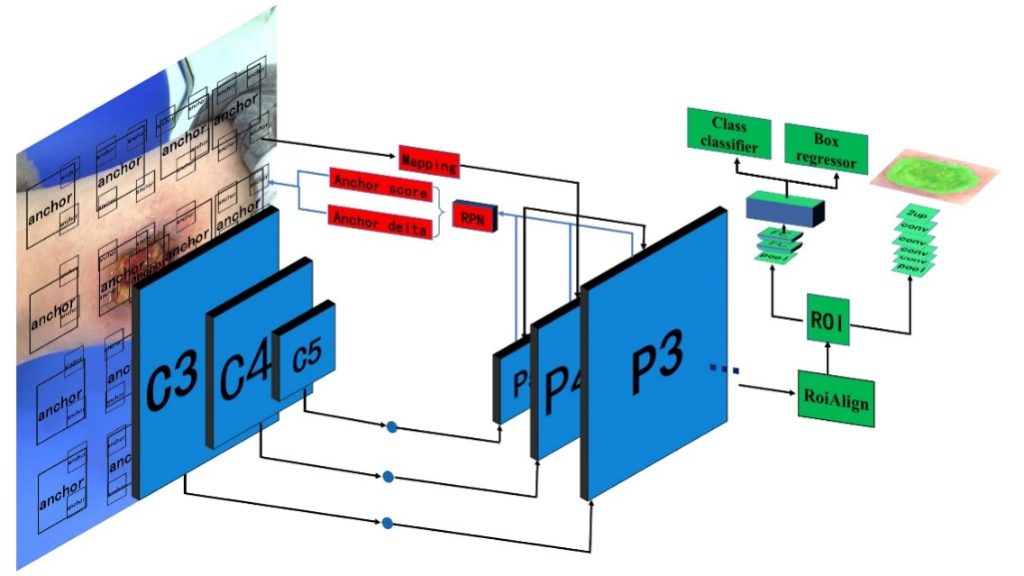

In this study, the researchers employed a deep learning framework to segment burn wounds automatically.

As shown in Fig. 2, this framework is based on Mask R-CNN (Mask Regions with Convolutional Neural Network), which has become a mainstream framework for image segmentation. To obtain better segmentation results on burn wound images, the researchers modified the framework in two ways. First, they combined a Residual Network (ResNet) with a Feature Pyramid Network (FPN) to improve image feature extraction. Second, in the FCN (Fully Convolutional Network) branch, they replaced multi-class classification with two-class (binary) classification. In addition, transfer learning was used to compensate for the limited size of the burn image dataset during training.
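
In Mask R-CNN as originally published, the mask branch (a small FCN) scores each pixel with an independent sigmoid and a binary cross-entropy loss, rather than with FCN's per-pixel softmax over all classes. With a single burn class, every pixel becomes a two-class, burn-versus-background decision. The pure-Python sketch below illustrates that loss on a tiny grid of logits. It is not the authors' code, the function names are hypothetical, and a real model would use a framework's built-in loss (for example PyTorch's `BCEWithLogitsLoss`).

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def binary_mask_loss(logits, target, eps=1e-7):
    """Mean per-pixel binary cross-entropy between mask logits and a 0/1 target mask.

    Each pixel is an independent burn-vs-background decision (sigmoid),
    not a softmax competition among several classes.
    """
    total, count = 0.0, 0
    for logit_row, target_row in zip(logits, target):
        for z, t in zip(logit_row, target_row):
            p = min(max(sigmoid(z), eps), 1.0 - eps)  # clamp to avoid log(0)
            total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
            count += 1
    return total / count


def predict_mask(logits, threshold=0.5):
    """Turn mask logits into a binary mask by thresholding the sigmoid probability."""
    return [[1 if sigmoid(z) >= threshold else 0 for z in row] for row in logits]


# Usage: a 2 x 2 patch of logits against its ground-truth wound mask.
logits = [[4.0, -3.0], [2.5, -0.5]]
target = [[1, 0], [1, 0]]
print(predict_mask(logits))
print(round(binary_mask_loss(logits, target), 4))
```

Because each pixel's probability depends only on its own logit, confident correct logits drive the loss toward zero, while a confidently wrong pixel is penalized heavily.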

Segmentation Results

With these modifications, the framework achieved strong segmentation performance.

The researchers tested the framework on 150 burn images and obtained an average Dice coefficient (DC) of 84.51%. The results show that the framework can segment burn wounds of different depths and sizes. Fig. 3 shows the segmentation results for these burn wounds.
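
Dice's coefficient measures the overlap between a predicted mask A and a ground-truth mask B as DC = 2|A ∩ B| / (|A| + |B|), ranging from 0 (no overlap) to 1 (perfect overlap). The article reports only the average over the 150 test images; the sketch below assumes masks are equal-sized 0/1 grids and that the average is a simple per-image mean. The function names and the convention for two empty masks are illustrative assumptions.

```python
def dice_coefficient(pred, truth):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for two equal-sized 0/1 masks."""
    intersection = pred_area = truth_area = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            intersection += p * t  # pixel is wound in both masks
            pred_area += p
            truth_area += t
    if pred_area + truth_area == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * intersection / (pred_area + truth_area)


def mean_dice(mask_pairs):
    """Average the per-image Dice coefficient over (prediction, ground truth) pairs."""
    scores = [dice_coefficient(pred, truth) for pred, truth in mask_pairs]
    return sum(scores) / len(scores)


# Usage: one prediction that partly overlaps its ground truth.
pred = [[1, 1, 0], [0, 1, 0]]
truth = [[1, 0, 0], [0, 1, 1]]
print(dice_coefficient(pred, truth))  # 2 * 2 / (3 + 3)
```

Under this reading, the reported 84.51% corresponds to `mean_dice` returning about 0.8451 over the 150 test pairs.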

Although it can segment wounds of varying depth, the framework cannot yet automatically determine burn depth. The researchers therefore plan to continue working on automated diagnosis of burn depth.
