For several years, we have been studying the use of brain-machine interfaces (BMIs) to give people who cannot move their own arms the ability to guide a prosthetic device.

In tetraplegia, a paralysis of all four limbs caused by injury or illness, the brain continues to generate movement-related command signals even though these signals can no longer reach the limbs to generate movement.

This loss of function can limit a person’s ability to independently perform many activities of daily living.

Restoring lost function

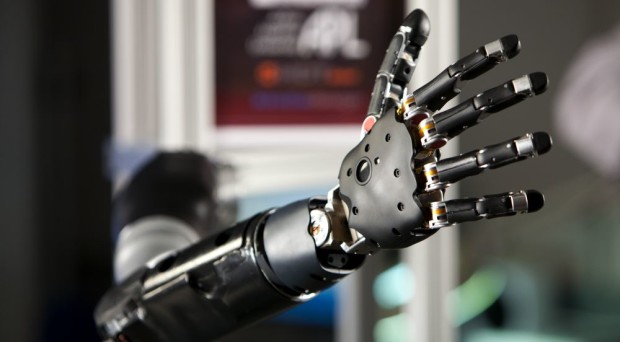

With a BMI, we can create a direct connection between a person’s brain activity and an external device, such as a robotic arm, to restore lost function.

In our initial studies, we used intracortical microelectrode arrays to record activity from approximately 200 neurons in the motor cortex and showed that this type of BMI can be used to control a robotic arm with skill and speed approaching that of an able-bodied person.

Grasping and transporting an object is a complicated task that requires coordination of all of the joints of the hand and arm. It becomes even more complicated if the person cannot feel what he or she is holding, as is the case with a BMI, which often does not provide sensory feedback.

What did we do?

With our collaborators at the Carnegie Mellon Robotics Institute, we sought to make such tasks easier by using computer vision to identify objects in the workspace.

After the user reaches for the object, the robotic arm can be programmed to autonomously, or automatically, grasp it in a stable manner and allow it to be moved without being dropped.

In our study in the Journal of NeuroEngineering and Rehabilitation, we thus blended human intention, derived from the brain signals recorded from the BMI, and autonomous robotics to improve the performance of a BMI for reaching and grasping objects. We call this shared control.

Shared control allows the BMI user to maintain control over high-level decisions, such as moving the hand towards the object and choosing when to grasp, while the autonomous robotic system handles the details of the movement, such as orienting the hand or keeping the hand open until it is in the correct position.

We blended these signals in such a way as to maximize the amount of control maintained by the BMI user, while minimizing potential frustration and reducing the task difficulty.
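To make the idea of blending more concrete, here is a minimal sketch in Python. It is not the method used in the study: it assumes a simple linear blend of the user's decoded velocity and the robot's suggested velocity, with the robot's share growing as the hand nears the object and capped so the user always keeps most of the control. The function names, distances, and the 0.6 cap are all hypothetical values chosen for illustration.

```python
def assist_weight(distance_m, near_m=0.05, far_m=0.30, max_assist=0.6):
    """Share of the command given to the autonomous system (0 to max_assist).

    Hypothetical rule: no assistance far from the object, then a linear
    ramp up to a capped maximum once the hand is close.
    """
    if distance_m >= far_m:
        return 0.0
    if distance_m <= near_m:
        return max_assist
    # Linear ramp between far_m (no assistance) and near_m (full assistance).
    return max_assist * (far_m - distance_m) / (far_m - near_m)


def blend_velocity(user_vel, auto_vel, alpha):
    """Linearly blend two velocity vectors; alpha is the autonomous share."""
    return tuple((1.0 - alpha) * u + alpha * a
                 for u, a in zip(user_vel, auto_vel))


def gate_grasp(user_wants_grasp, hand_aligned):
    """Close the hand only if the user asks for it AND the hand is in position.

    The user decides when to grasp; the robot keeps the hand open until
    it is aligned with the object (assumed to be reported by the vision system).
    """
    return user_wants_grasp and hand_aligned


if __name__ == "__main__":
    # Hand is 17.5 cm from the object: the robot gets a partial share.
    alpha = assist_weight(0.175)
    user = (1.0, 0.0, 0.0)   # decoded from brain activity (made-up values)
    robot = (0.0, 1.0, 0.0)  # suggested by the autonomous system (made-up values)
    print(alpha, blend_velocity(user, robot, alpha))
    print(gate_grasp(user_wants_grasp=True, hand_aligned=False))
```

Capping the robot's share below 1 is one way to reflect the goal described above: the user stays in charge of where the hand goes, while the automation only nudges the movement when it is likely to help.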

What did we find?

Both participants in our study were more successful at reaching and grasping tasks when using shared control than when using the BMI alone.

In addition to more accurate and efficient movements, participants rated the tasks as being easier when using shared control.

https://youtu.be/kg1WWq_8kpA

We also showed that the user could select one of two objects in the workspace, which demonstrates that the user was still in control of the task.

https://youtu.be/0MlvDgPs_pM

Expanding on this in future work

Additional work is needed to expand the number and types of objects that the autonomous robotic system can recognize. We would also like users to be able to choose whether to use shared control in a given situation, and to interact with objects in different ways, such as picking up an object versus just pushing it along the table.

We will also continue to investigate the optimal way to balance control between the user and the automated system in order to provide high performance while ensuring that the user feels the device is reliable and responsive to their commands in many situations.
