Live streaming has become a popular part of internet culture. Platforms like TikTok and Twitch have anywhere from 60 to 140 million monthly active users.

Because virtually anyone can stream on these platforms, finding epic and funny moments is challenging amid the sheer volume of lengthy, seemingly mundane videos.

In a new study published in EPJ Data Science, we show how artificial intelligence (AI) can help human editors quickly spot interesting segments of live streaming content.

The AI decides which segments are interesting by combining several cues: audience reactions in chat messages, the structure of video frames, view counts, and streamer information. Among these, emojis and audience reactions are critical components that guide the algorithm.

We use deep learning to learn the features of epic moments from this multimodal data, and the resulting model suggests interesting video segments in a variety of contexts, including victories and funny, awkward, or embarrassing moments.

In a user study, the AI's suggestions were found to be comparable to expert suggestions in spotting epic moments.

Using recommended clips as guiding data

To train the algorithm, we need guiding data that represent “epicness.” On Twitch, these come in the form of manually constructed “clips” (Twitch highlights): 5- to 60-second segments contributed by streamers and viewers.

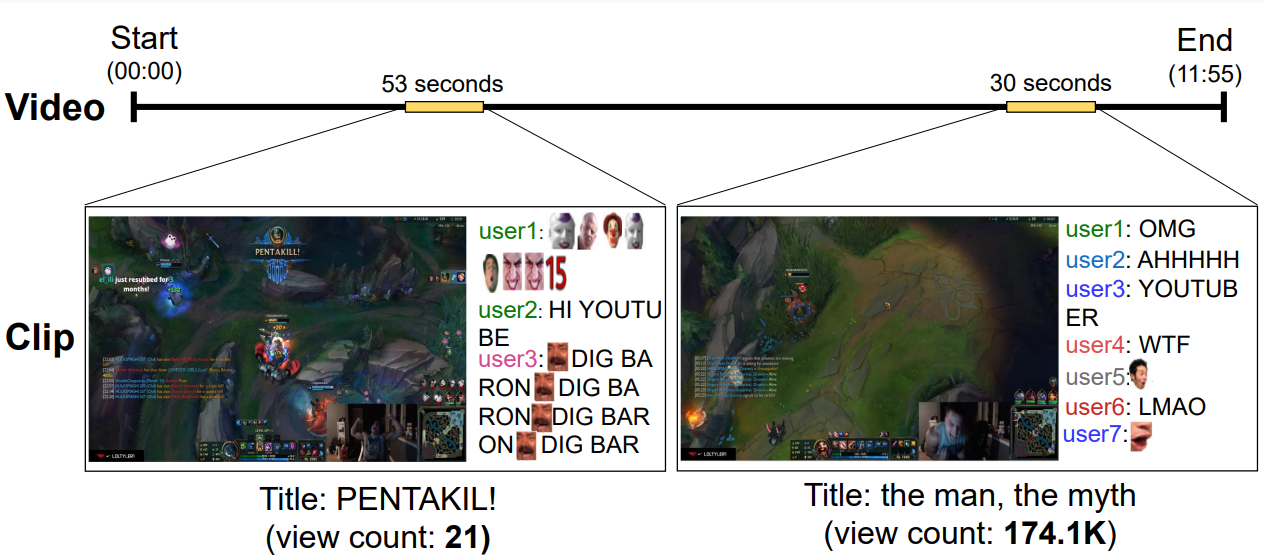

Figure 1 shows an example of a live stream that lasted 11 minutes and 55 seconds. Two of its segments had been highlighted as recommended “clips”, running 53 and 30 seconds, respectively.
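As a rough illustration of how clips can serve as guiding data, the sketch below splits a stream into fixed-length windows and labels the windows that clips cover as epic. The stream length (715 seconds) and clip durations (53 and 30 seconds) come from Figure 1; the clip start times, the 10-second window, the 50% coverage threshold, and the `Clip` and `label_windows` names are hypothetical choices for this example, not the labeling scheme used in the study.

```python
from dataclasses import dataclass

@dataclass
class Clip:
    start: float     # seconds from the beginning of the stream (hypothetical)
    duration: float  # clip length in seconds

def label_windows(stream_length, clips, window=10.0, min_overlap=0.5):
    """Split a stream into fixed-length windows and label each one 1 (epic)
    if clips cover at least `min_overlap` of it, otherwise 0."""
    labels = []
    t = 0.0
    while t < stream_length:
        end = min(t + window, stream_length)  # last window may be shorter
        covered = 0.0
        for c in clips:
            overlap = min(end, c.start + c.duration) - max(t, c.start)
            covered += max(0.0, overlap)
        # Cap at the window length in case clips overlap each other.
        covered = min(covered, end - t)
        labels.append(1 if covered / (end - t) >= min_overlap else 0)
        t += window
    return labels

# Durations from Figure 1 (715 s stream, 53 s and 30 s clips); start times are invented.
clips = [Clip(start=120.0, duration=53.0), Clip(start=600.0, duration=30.0)]
labels = label_windows(715.0, clips)
print(len(labels), sum(labels))  # 72 8
```

Windows only partly covered by a clip (such as 170 to 180 seconds here) fall below the threshold and are labeled 0; a finer window or a soft, fractional label would be alternative design choices.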

The second clip received over 170,000 views, indicating greater epicness. The figure also shows user reactions to the selected segments; emojis and Twitch-specific emoticons (emotes) are common in chat.

We collected two million user-recommended clips and the associated chat conversations to understand the ingredients of epic moments. We define an epic moment as an enjoyable, bite-sized summary of a long video.

Epic moments are similar to video highlights in that both are short summaries of long videos, yet the two serve different functions: epic moments are “enjoyable,” whereas highlights are “informative.”

Social signals as a cue for epic moments

We discovered that emotes and user reactions play a critical role in finding epic moments.
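One simple way to turn chat into a social signal is to count emotes per time window. In this sketch, the emote set, the message format `(timestamp_seconds, text)`, the 10-second window, and the `emote_counts` name are all hypothetical; the study's actual chat features may differ.

```python
import math

# Hypothetical emote vocabulary; a real system would load a full list of Twitch emotes.
EMOTES = {"PogChamp", "LUL", "Kappa", "KEKW"}

def emote_counts(messages, stream_length, window=10.0):
    """Count emote tokens in chat messages, bucketed into fixed time windows.

    messages: iterable of (timestamp_seconds, text) pairs.
    Returns a list with one count per window.
    """
    counts = [0] * math.ceil(stream_length / window)
    for ts, text in messages:
        if 0 <= ts < stream_length:
            # Whitespace tokenization: emote codes are typed as standalone words.
            counts[int(ts // window)] += sum(tok in EMOTES for tok in text.split())
    return counts

chat = [
    (3.0, "hello chat"),
    (125.0, "PogChamp PogChamp"),
    (128.5, "LUL that was close"),
    (605.0, "KEKW"),
]
counts = emote_counts(chat, stream_length=715.0)
print(counts[12], counts[60], sum(counts))  # 3 1 4
```

A burst of emotes, like the one in window 12 here, marks a candidate epic moment; a learned model would weigh such bursts against the other cues rather than use a fixed rule.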

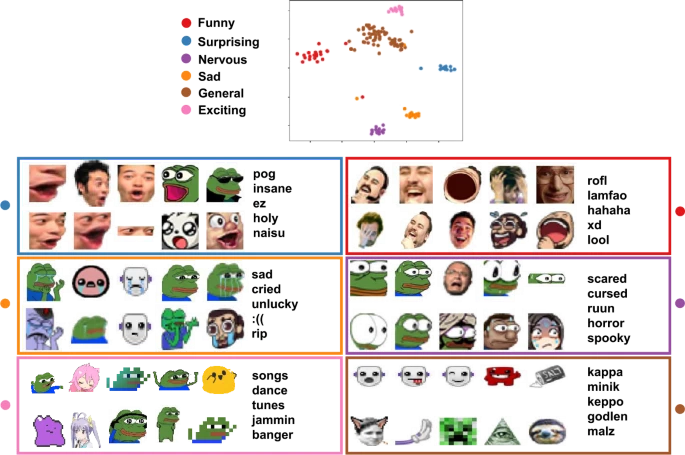

Figure 2 shows the results of clustering the emotes that appear in user chats, projected onto a two-dimensional space using t-distributed stochastic neighbor embedding (t-SNE).

Colors indicate each cluster's category, and the plot lists the five word tokens closest to each emote cluster. We can see that similar-looking emotes serve as emotional expressions on Twitch.
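The analysis behind Figure 2 can be approximated in a few lines. The article does not specify the clustering algorithm or how emotes and words are embedded, so this sketch assumes both already have vectors in a shared space (the toy 2-D coordinates below are invented) and substitutes plain k-means with deterministic farthest-point initialization. It then ranks word tokens by cosine similarity to each cluster centroid, mirroring the closest word tokens shown in the figure. The `kmeans`, `cosine`, and `nearest_tokens` helpers are hypothetical; a real 2-D projection for plotting could come from scikit-learn's `sklearn.manifold.TSNE`.

```python
import math

# Invented 2-D embeddings for illustration; real ones would be learned from chat logs.
EMOTES = {"LUL": (0.9, 0.1), "KEKW": (1.0, 0.2), "OMEGALUL": (0.8, 0.0),
          "PogChamp": (-0.9, 0.8), "Pog": (-1.0, 0.9), "PogU": (-0.8, 1.0)}
WORDS = {"lol": (0.95, 0.15), "haha": (0.85, 0.05), "funny": (0.9, 0.3),
         "dead": (0.7, -0.1), "rofl": (1.0, 0.1), "wow": (-0.9, 0.85),
         "insane": (-0.85, 0.95), "clutch": (-0.7, 0.9), "omg": (-0.8, 0.8),
         "hype": (-0.95, 0.75)}

def kmeans(points, k, iters=50):
    """Plain k-means with deterministic farthest-point initialization."""
    centroids = [points[0]]
    while len(centroids) < k:
        # Pick the point farthest from all current centroids.
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        assign = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new = []
        for j in range(k):
            members = [p for p, a in zip(points, assign) if a == j]
            new.append(tuple(sum(xs) / len(members) for xs in zip(*members)) if members else centroids[j])
        if new == centroids:  # converged
            break
        centroids = new
    return assign, centroids

def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    return sum(a * b for a, b in zip(u, v)) / (math.hypot(*u) * math.hypot(*v))

def nearest_tokens(centroid, word_vectors, n=5):
    """Return the n words whose vectors are most similar to the centroid."""
    return sorted(word_vectors, key=lambda w: cosine(centroid, word_vectors[w]), reverse=True)[:n]

names = list(EMOTES)
labels, centroids = kmeans([EMOTES[e] for e in names], k=2)
print(dict(zip(names, labels)))
for j, c in enumerate(centroids):
    print(j, nearest_tokens(c, WORDS))
```

With this toy data, the laughing emotes land in one cluster whose nearest words are laughter-related, and the "Pog" family lands in another whose nearest words express hype or surprise.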

We used these insights to build a deep learning model called Multimodal Detection with INTerpretability (MINT), which combines and analyzes key features such as chat, video metadata, and view count.

The features from these three domains capture different aspects of epic moments, and combining them leads to better predictions.
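To illustrate the idea of combining domains, here is a minimal feature-level fusion sketch: per-domain features are concatenated and passed through a single logistic unit. This is not MINT's architecture, which is a deep learning model; the feature choices, the hand-picked weights, and the `fuse_and_score` function are hypothetical placeholders for values a trained model would learn.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def fuse_and_score(chat, video, views, weights, bias):
    """Concatenate chat, video, and view-count features, then apply a single
    logistic unit to get an 'epicness' score in (0, 1)."""
    features = [*chat, *video, *views]
    if len(features) != len(weights):
        raise ValueError("need exactly one weight per feature")
    z = bias + sum(w * f for w, f in zip(weights, features))
    return sigmoid(z)

# Placeholder parameters; a trained model would learn these from clip labels.
WEIGHTS = [0.5, 0.1, 1.0, 0.3]
BIAS = -2.0

# chat = [emote count, messages per second], video = [one video feature],
# views = [log10 of view count]; all values are made up for illustration.
busy = fuse_and_score([3.0, 5.0], [0.2], [2.0], WEIGHTS, BIAS)
quiet = fuse_and_score([0.0, 1.0], [0.1], [0.5], WEIGHTS, BIAS)
print(round(busy, 3), round(quiet, 3))  # 0.69 0.161
```

Because the score depends on all three groups of features, a strong chat signal can be reinforced or tempered by video and view-count cues, which is the intuition behind fusing multiple domains.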

A user study also confirmed that participants judged the algorithmic suggestions to be as enjoyable as human-recommended clips.

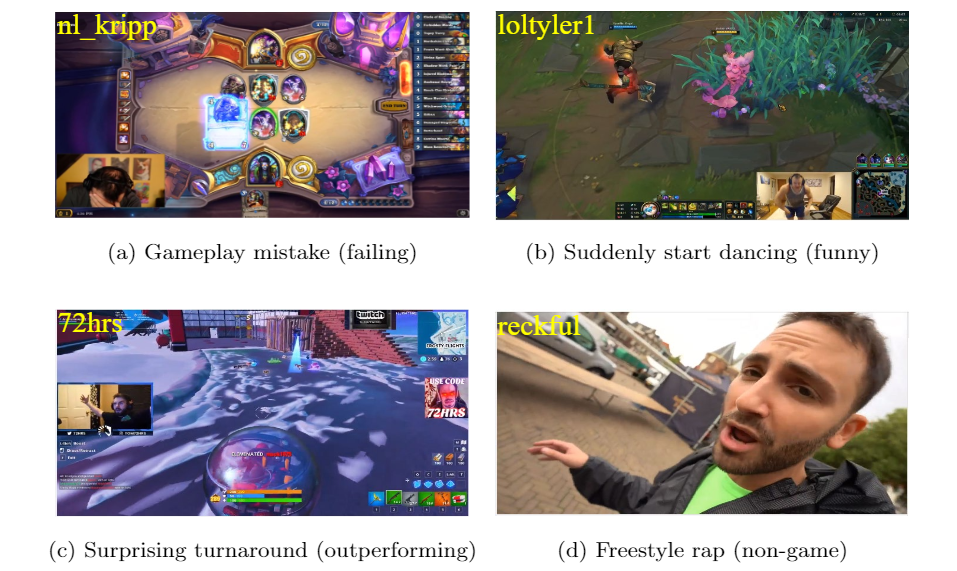

Furthermore, the algorithmic suggestions span various contexts, such as in-game failures, funny dance moves, a surprising comeback during a game, and non-gaming moments, as shown in Figure 3.

By contrast, most human suggestions contained game-winning moments.

As more and more people spend time watching live streaming content on the internet, AI suggestions can help editors and viewers discover epic moments.

Researchers interested in the code for the MINT algorithm and the clip dataset used for training can find more information on our GitHub page: https://github.com/dscig/twitch-highlight-detection
