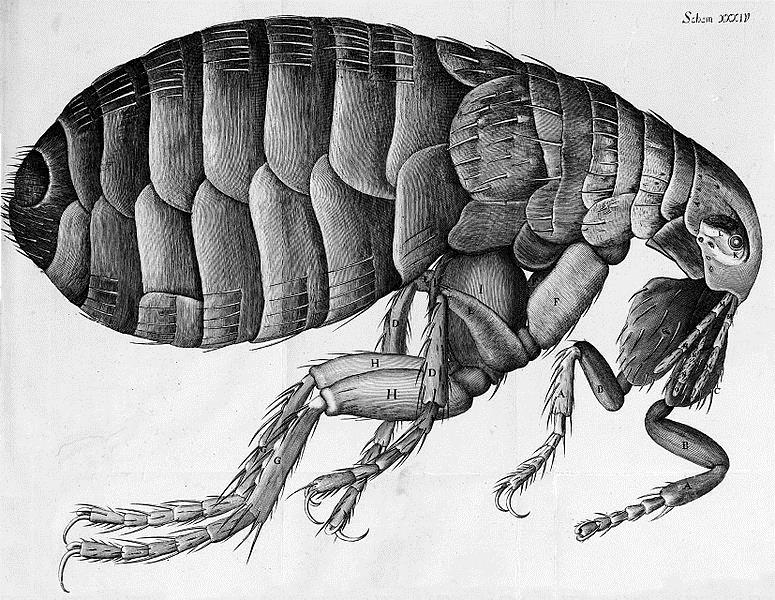

Observation is a key part of the scientific process. For many years significant effort was required to capture single scientific images, from meticulously hand-drawn views through a microscope (e.g. Robert Hooke’s sketches of fleas), through to photographic plates used in astronomy. These methods were all rather slow, allowing the scientists ample time to analyze and interpret each individual image.

However, with the advent of modern imaging systems that incorporate fast digital cameras and detectors, precision motion control and access to vast storage banks, the speed at which image data is captured has grown astronomically. This means that the scientists are no longer able to dedicate large amounts of time to each image – in fact, images can be generated so quickly in some systems that no single person could even hope to look at each image, let alone perform any significant analysis.

In the age of computers, it seems obvious that we should simply write some software that analyzes the images for us. However, because humans understand and interpret images so easily, we have chronically underestimated just how hard this task actually is. In the early days of AI, a project to construct ‘a significant part of a visual system’ in software was expected to be sufficient to keep some students busy over the summer. This was in 1966 – fast forward over half a century and we still haven’t come close to solving this problem!

It turns out that humans are amazing. We can perform myriad visual processing tasks in the blink of an eye, effortlessly combining disparate concepts in a way that helps us understand complex visual scenes. While there is renewed hope that recent advances in AI, in particular so-called deep-learning methods, will eventually approach human-level image-processing skills, many tasks are still beyond the capabilities of even the biggest supercomputers.

The rise of citizen science

Since humans consistently outperform machines at many visual tasks, and it pays to use the right tool for the job, it makes sense to use human processing power in the areas where people still trump all other methods.

But if you have a lot of images, you need a lot of humans. Coordinating the efforts of large numbers of non-specialist volunteers is known as citizen science or crowdsourcing.

This approach has been used to great effect in an ever-growing range of scientific arenas. One of the early projects, Galaxy Zoo, crowdsources efforts from an army of citizen scientists to classify the shapes of vast numbers of galaxies from telescope images. Along the way, citizen scientists even directly made brand new discoveries such as Hanny’s Voorwerp and Green Pea galaxies.

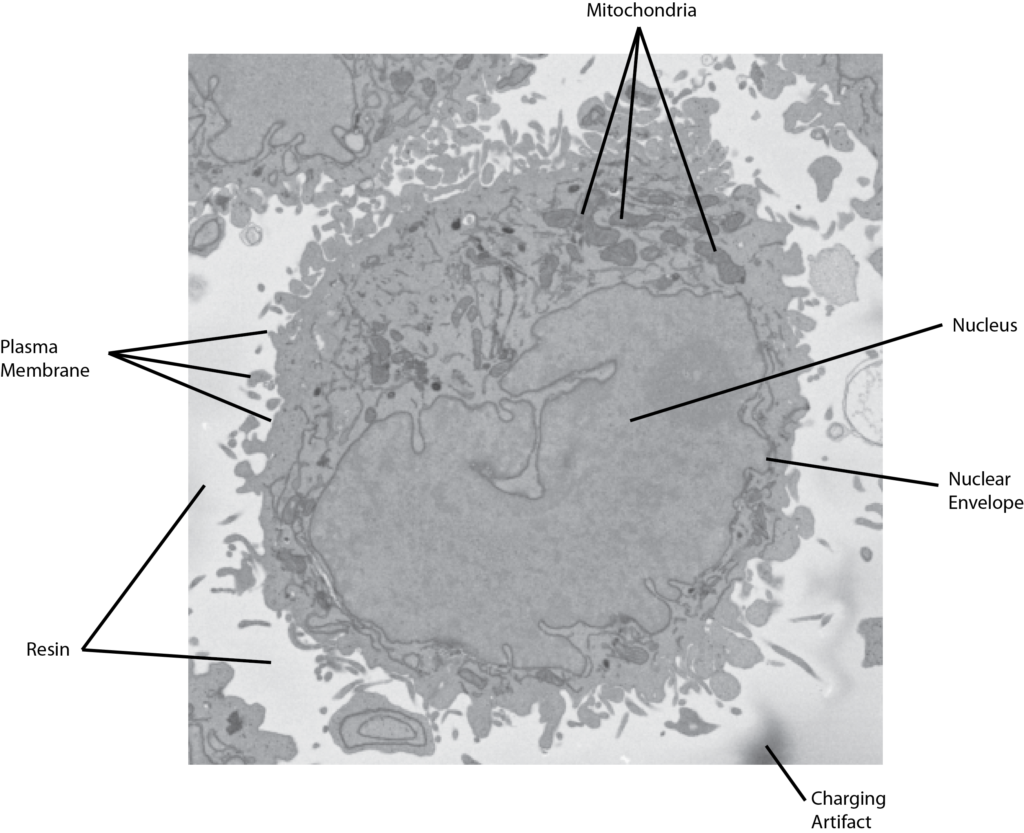

Following the success of Galaxy Zoo, the project grew into the Zooniverse platform that now hosts a variety of citizen science projects, including our own Etch a Cell project, where we ask people to help us to accurately find the nuclear envelope (the membrane surrounding the nucleus) in a collection of electron microscopy images. The images are taken slice-by-slice through many different cells embedded in resin.

The nature of the images means that powerful computers struggle to tell apart the different structures, whereas a person with a modest amount of training is able to recognize and trace around specific structures with high accuracy.

The electron microscopes that we use to capture these images are technological wonders, able to image objects like cells in exquisite detail, seeing structures down to a few nanometers in scale (a nanometer is a millionth of a millimeter – a human hair is around 100,000 nanometers in diameter).

Even though each cell is tiny, it might be imaged as a sequence of hundreds or thousands of images, each of which could be 100 megapixels in size. This can require many gigabytes or even terabytes of storage. The imaging is performed automatically, placing the scientific bottleneck firmly at the image analysis stage. We hope that, by using the superior visual skills of humans, we will be able to process our data more efficiently, as well as training future generations of computational algorithms up to human-level accuracy.
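To put rough numbers on those storage figures, here is a minimal back-of-the-envelope sketch. The image counts and the 100-megapixel size come from the paragraph above; the one byte per pixel (8-bit greyscale, uncompressed) is purely an assumption for illustration, and the function name is made up for this example.

```python
def stack_size_bytes(num_images: int, megapixels: int, bytes_per_pixel: int = 1) -> int:
    """Estimate the uncompressed size of one image stack in bytes.

    bytes_per_pixel=1 assumes 8-bit greyscale; this is an illustrative
    assumption, not a statement about any particular microscope.
    """
    pixels_per_image = megapixels * 1_000_000
    return num_images * pixels_per_image * bytes_per_pixel


# "Hundreds or thousands" of 100-megapixel slices through a single cell.
for num_images in (100, 1_000, 10_000):
    size_gb = stack_size_bytes(num_images, 100) / 1_000_000_000
    print(f"{num_images} images: about {size_gb:.0f} GB")
```

Under these assumptions a thousand slices already come to around 100 gigabytes, and ten thousand slices reach a terabyte, before any compression or higher bit depth is taken into account.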

As well as helping us deal with the science, this type of project is an excellent way of engaging the public with research. One of the unexpected benefits of our Etch a Cell project is that some schoolteachers have used it in their classrooms as a more engaging way for students to learn about cells. Rather than the simplified line drawings in ageing textbooks that make cells look like fried eggs, students are instead able to interact with real, raw scientific data. This gives them an insight into the nitty-gritty of the research process, rather than just seeing the polished press release once a discovery has been made.

If you want to try out some citizen science projects, take a look at sites like SciStarter, SciFabric Crowdcrafting and the Zooniverse.

Come and see Martin talk about citizen science at SpotOn on the 3rd November at the Francis Crick Institute.
