The consequences are even more serious when fake news leads to violence. In 2018, more than twenty people were killed in India after rumors about child abductions spread on WhatsApp and other direct messaging apps.

What’s the solution?

In response, governments, social media companies and civil society organisations are all eager to find sustainable solutions to the problem.

Broadly speaking, there are four categories of such solutions: tweaking algorithms to disincentivise manipulative content and developing new ones to detect it; passing new legislation against creating and spreading fake news; improving existing fact-checking mechanisms; and investing in media literacy education. However, each of these approaches comes with serious downsides.

Detection algorithms aren’t 100% accurate, and borderline ("grey zone") cases risk censorship and can backfire. Human fact-checkers fare little better, because they cannot keep up with the volume of fake news being produced.

Moreover, because of the "continued influence effect" of misinformation, people who have been exposed to misinformation often continue to believe it, even after it has been debunked.

Finally, media literacy education has potential, but suffers from two issues: one, misinformation strategies evolve over time, making what you once learned less and less useful; and two, it rarely reaches people who are no longer in education (e.g. the elderly), who are often more vulnerable to receiving and spreading fake news.

This leaves us with an interesting problem: how do you prevent the spread of misinformation?

Enter inoculation theory: the science of prebunking

As behavioral scientists, we decided to focus on preventing misinformation rather than combating it after it has already gone viral: prebunking rather than debunking. We draw on inoculation theory, a psychological framework from the 1960s that aims to induce pre-emptive resistance against unwanted persuasion attempts.

Just as administering a weakened dose of a virus (the vaccine) prompts the immune system to produce antibodies that fight off future infection, we reasoned that pre-emptively exposing people to weakened examples of common techniques used in the production of fake news would generate ‘mental antibodies’. And if enough individuals are immunized, the informational ‘virus’ won’t be able to spread.

Bad News

To do so, we developed a free online browser game, Bad News, in collaboration with the Dutch media literacy organisation DROG. In the game (Figure 1), players take on the role of an aspiring fake news tycoon: their task is to gain as many followers as possible by actively spreading fake news, learning six common misinformation strategies in the process (including polarization, conspiracy theories, and the use of emotion in media).

The game works as a “vaccine” against misinformation by letting people actively reason their way through how misinformation works prior to being exposed to the “real” version on the internet.

Does it work?

We tested about 15,000 participants before and after they played the game, asking them to rate headlines they had not seen before: fake headlines that used common disinformation techniques, as well as real headlines.

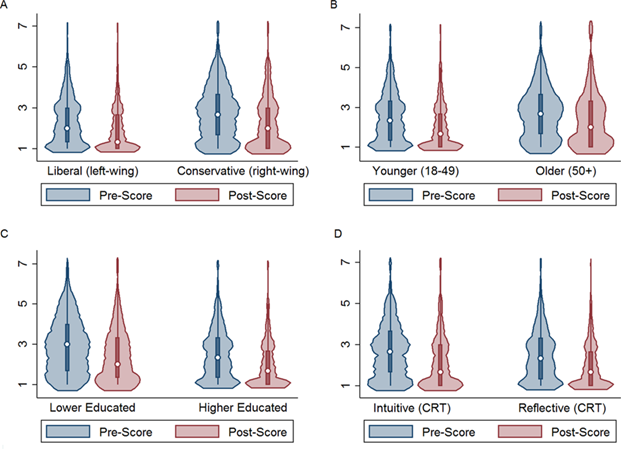

The results, which were recently published in Palgrave Communications, revealed that the intervention was effective. Figure 2 shows a violin plot of the mean reliability ratings of deceptive headlines on a 7-point scale prior to (blue) and after (red) playing, for various demographics. Participants significantly downgraded the reliability of fake (but not real) headlines after playing.
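To make the pre/post logic concrete, here is a minimal sketch of a within-participant comparison: for each participant, subtract the pre-game reliability rating from the post-game rating, then summarize those differences with a mean and a paired t statistic. The ratings below are entirely hypothetical (they are not the study's data), and the paired t statistic is an illustrative choice, not necessarily the analysis used in the published paper.

```python
from math import sqrt
from statistics import mean, stdev

# HYPOTHETICAL ratings on a 7-point scale (1 = not at all reliable, 7 = very reliable).
# These are NOT the study's data. Each tuple is one participant's (pre, post) rating.
fake_ratings = [(4.0, 2.5), (3.5, 2.0), (5.0, 3.5), (4.5, 3.0), (3.0, 2.5)]
real_ratings = [(5.0, 5.0), (4.5, 4.5), (5.5, 5.0), (4.0, 4.5), (5.0, 5.0)]


def paired_change(pairs):
    """Return the mean post-minus-pre change and the paired t statistic."""
    diffs = [post - pre for pre, post in pairs]
    m = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    # t = mean difference / standard error of the mean difference
    t = m / (sd / sqrt(len(diffs))) if sd > 0 else float("nan")
    return m, t


for label, pairs in [("fake", fake_ratings), ("real", real_ratings)]:
    m, t = paired_change(pairs)
    print(f"{label}: mean change {m:+.2f}, paired t = {t:.2f}")
```

A negative mean change for fake headlines, alongside a change near zero for real ones, is the pattern the study reports: players became more skeptical of deceptive content without becoming skeptical of everything.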

The value of Bad News as an anti-misinformation tool is underscored by the fact that the game “works” across demographic groups, including age, political ideology, and education level.

It is important to note that the sample was not representative and the intervention was not randomized. However, we have since started to conduct randomized controlled trials with the game, and their results further attest to the robustness of the intervention.

The game was also a success outside academia. We set out to develop an intervention interesting enough for a broad slice of the population to engage with voluntarily, and more than half a million people have played it so far.

The game has also received award nominations from design companies and the Behavioral Insights Team, as well as positive reviews from gaming websites.

In collaboration with the UK Foreign Office, Bad News has now been translated into 13 languages, including Dutch, German, Czech, Polish, Greek, Esperanto, Swedish and Serbian.

These findings highlight the need to apply this approach in other domains where misinformation poses a threat.

For example, we are currently working with WhatsApp to combat misinformation on direct messaging apps.

Of course, “prebunking” cannot be the only solution. We view it as a first line of defense in what should be a multi-layered anti-misinformation strategy for the post-truth era, one that combines insights from behavioral science with those from computer science, education, and public policy.
