#peerrevwk15 News: Crediting our Amazing Reviewers with DOIs

That Was The Review Week That Was

If you’ve seen the #peerrevwk15 hashtag on social media, you may have noticed that this week is the first ever Peer Review Week. Like Open Access Week in a few weeks’ time (watch this space for some events we’ll be participating in), this is a great opportunity to throw some light on what goes on “under the hood” in academic publishing, as well as to encourage innovation and uptake of more open and transparent research practices.

As transparency is a key part of our reproducibility and openness drive, we are big fans of open peer review, and are also keen experimenters and early adopters of many of the novel platforms and schemes trying to update and improve upon this fundamental but imperfect system of assessing academic research. We’ve written much in this blog on our policies and experiences with open peer review, and summarized much of it in an editorial we published in 2013. Since then we’ve continued to learn and adapt our policies, collaborating with Publons and AcademicKarma (see the interviews with Andrew Preston of Publons and Lachlan Coin of AcademicKarma), and being featured journals for both. Peer Review Week is the perfect time for an update on where we are, and we have a few announcements to make on policy and technical changes to this process.

I Believe That Open Peer Review is the Future.

We don’t need to say too much on the benefits of Open Peer Review, as Mick Watson does a fantastic job in his impassioned critique of why anonymous peer review is bad for science here. The arguments against open review regarding reviewers being more fearful of criticism have been successfully debunked by the reams of data from the many medical journals, like BMJ and the BMC Series medical journals, that have been carrying out open review for over a decade and a half. There are slight costs in terms of time of review (less than a day) and the number of reviewers per paper you need to invite (BMC has great data showing it is 5% harder to find reviewers), but we think the benefits of much better quality reviews far outweigh the overheads. PLOS announcing that they are moving PLOS ONE, the world’s largest journal, toward more transparent peer review will be a major step toward it becoming the norm. For more conservative journals that still haven’t discovered the benefits, the fact that over 45,000 reviewers have taken matters into their own hands and posted their reviews on the Publons platform means that they will increasingly become open review journals whether they want to or not.

To update where we are since the Editorial: there was a slight setback when BMC changed some of their policies and removed some of the pre-publication information from our records, but we are now capturing all of these missing links and putting them in a public Editor’s comment (see this pre-pub history for example). Thanks to our integration with the Publons platform we are also including all of this information in our Publons review pages. As only two reviewers to date have asked to be anonymous on their reports, we have now removed our option to opt out of named peer review and are updating our reviewer instructions to state so. We are also encouraging reviewers to follow the peer-review oath, and are updating our reviewer instructions to reflect this at the same time. As you may have seen in our editorial in July, we are also trialling a more detailed reproducibility checklist, in which reviewers are asked to specifically check a number of points and to confirm the information has been satisfactorily reported and reviewed.

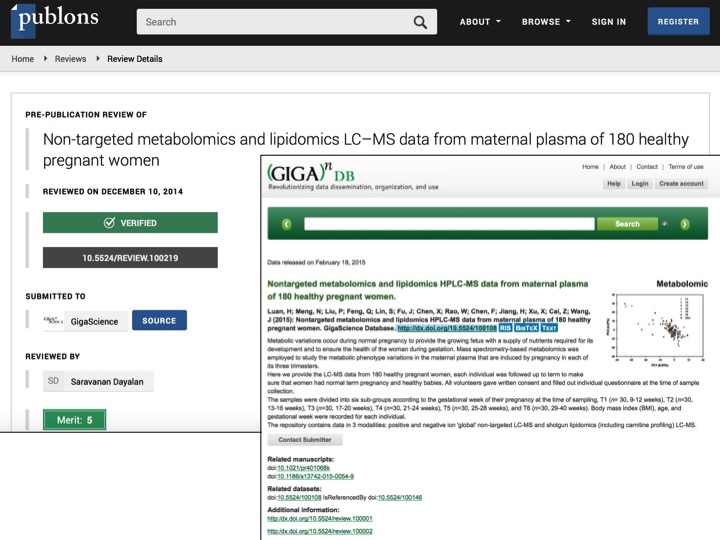

Data Reviews Are Coming. You Review and you DOI.

Our reviewers are fantastic, and on top of making their efforts more transparent we feel it is important to give them some form of formal credit for the hard work they do. Thanks to our partners at Publons and DataCite we can now do this by assigning DOIs to all our peer reviews. This makes them searchable via the many sources that index these records, such as DataCite metadata search, The Thomson Reuters Data Citation Index, and the OSF Share registry. We’ve manually added DOIs that resolve to the more than 300 reviews we currently have in Publons, and have now created a pipeline using the Publons and DataCite APIs to automate this process. If any other publishers wish to reuse and build upon this functionality, we have made it available via our GitHub repository. As with the Mozilla/ORCID/BMC/Ubiquity Contributorship badges project announced this week, in which we are participating, we are keen to disambiguate and credit the different roles in the research cycle. Having citable DOIs for our GigaDB hosted datasets allows researchers to add them to their ORCID profiles, and a new feature we are integrating in our GigaDB entries is to highlight and link the DOIs of the reviews to any datasets that have been peer reviewed in conjunction with a GigaScience article. You can see an example here with the DOIs to the reviews linked from the Additional information section, and in the next version of the database they will be more prominently highlighted.
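
For publishers curious what the metadata for a review DOI might look like, here is a minimal sketch in Python. It is not the code from our GitHub repository, and it makes no network calls. The function name `build_review_doi_payload`, the input field names, the placeholder prefix `10.1234`, and the DOI suffix pattern are all hypothetical, chosen only for illustration. The attribute names are modelled on the DataCite metadata schema as we understand it (for instance the `Reviews` relation type linking a review to the article it assesses), so please check them against the current DataCite documentation before relying on them.

```python
import json

# Hypothetical example record; every value here is a placeholder, not real data.
EXAMPLE_REVIEW = {
    "review_id": 42,
    "reviewer_name": "Example Reviewer",
    "article_title": "An example article",
    "article_doi": "10.1234/example.article",
    "review_url": "https://example.org/review/42",
    "publisher": "Example Publisher",
    "year": 2015,
}


def build_review_doi_payload(review, doi_prefix="10.1234"):
    """Build a DataCite-style metadata record for one peer review.

    Assumption: the DOI suffix pattern 'review.<id>' is invented for this
    sketch and is not the scheme used for real review DOIs.
    """
    doi = f"{doi_prefix}/review.{review['review_id']}"
    return {
        "data": {
            "type": "dois",
            "attributes": {
                "doi": doi,
                # The named reviewer is credited as the creator of the review.
                "creators": [{"name": review["reviewer_name"]}],
                "titles": [{"title": f"Peer review of: {review['article_title']}"}],
                "publisher": review["publisher"],
                "publicationYear": review["year"],
                "types": {
                    "resourceTypeGeneral": "Text",
                    "resourceType": "Peer review",
                },
                # Landing page the DOI should resolve to.
                "url": review["review_url"],
                # Link the review to the article it reviews.
                "relatedIdentifiers": [
                    {
                        "relatedIdentifier": review["article_doi"],
                        "relatedIdentifierType": "DOI",
                        "relationType": "Reviews",
                    }
                ],
            },
        }
    }


if __name__ == "__main__":
    print(json.dumps(build_review_doi_payload(EXAMPLE_REVIEW), indent=2))
```

Keeping the record-building step as a pure function, separate from whatever code talks to the Publons and DataCite services, makes it easy to inspect the metadata for a batch of reviews before anything is registered.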

Our reviewers continue to amaze us with the cutting-edge ways they interact with our data-heavy and computationally complicated papers. Without prompting, we’ve seen them blogging their reviews in a real-time open peer review process, posting them on GitHub, and even submitting pull requests as part of the process. Since making all our peer reviews open and CC-BY by default, we’ve also been surprised by how many people read the reviews of popular papers. With much work to follow from the many working groups tackling standards and practices for peer review, and from our collaborations with Publons and others, we will continue to adapt and improve upon this process, so keep following this blog to see how it develops.
