The extent of STEM literature has become truly staggering. In 2015, Scopus identified over 2.45 million citable items published in that year alone. This number includes books and conference abstracts as well as journal articles. Counting journal articles only, Web of Science indexed nearly 1.8 million articles and reviews in 2015, while Scopus indexed 1.7 million. These numbers reflect what some have described as exponential growth in scientific literature.

All of these 1.7 million manuscripts were assessed and peer reviewed prior to publication. A Taylor and Francis survey found that peer reviewers spend between four and six hours reviewing a paper. Assuming that each manuscript is seen by two reviewers, the amount of research time spent on peer review may have been on the order of 13 to 20 million person-hours in 2015. This is of course a rough calculation, as some fields rely on one peer reviewer and the editor’s own comments, while other journals try to find three reviewers. The estimate doesn’t account for papers that were sent for review at multiple journals, papers that were sent out for review but never published, or time spent on a second (or third) review.
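The back-of-envelope estimate can be reproduced from the figures quoted above. This is only a sketch: the two-reviewer assumption comes from the text, and the variable and function names are illustrative.

```python
# Rough estimate of reviewer time in 2015, using only figures quoted in the text.
ARTICLES_2015 = 1_700_000   # articles and reviews indexed by Scopus in 2015
REVIEWERS_PER_PAPER = 2     # simplifying assumption stated in the text
HOURS_PER_REVIEW = (4, 6)   # range reported by the Taylor and Francis survey


def review_hours(articles, reviewers, hours_per_review):
    """Total person-hours spent reviewing a set of published papers."""
    return articles * reviewers * hours_per_review


low, high = (
    review_hours(ARTICLES_2015, REVIEWERS_PER_PAPER, hours)
    for hours in HOURS_PER_REVIEW
)
print(f"{low / 1e6:.1f} to {high / 1e6:.1f} million person-hours")
```

Changing `REVIEWERS_PER_PAPER` to 1 or 3 shows how sensitive the total is to field-specific reviewing practice.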

It is thus clear that reviewing papers is a large burden on researchers’ time. A recent survey of professors at Boise State University found that peer review comprises 1.4% of academic work-time. Although this value is low in terms of absolute time spent working, it is comparable to the amount of time spent on writing (2.2%) and research development (1.8%), suggesting that peer review is an important component of these researchers’ core research/publication work time.

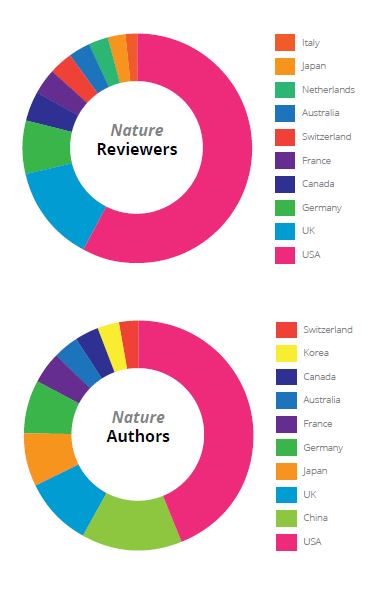

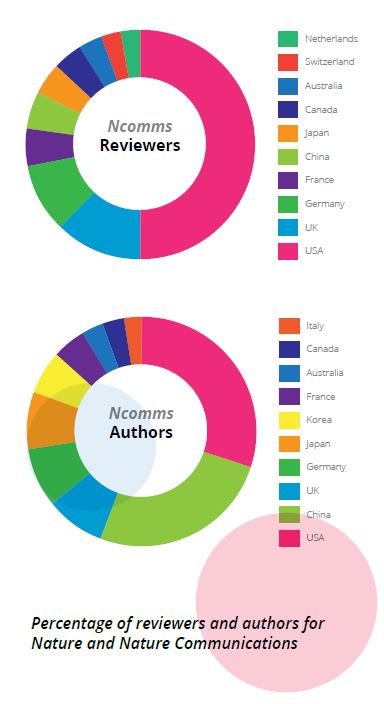

But are these efforts equally distributed among researchers? The answer is generally no, and it varies by publisher. Wiley’s 2015 reviewer survey found that US scientists reviewed 33% and 34% of health and life science papers, respectively, while publishing 22% and 24% of papers. A similar divide exists at two Nature Research titles, Nature and Nature Communications. In 2016, Americans contributed 50% of reviews, while comprising 36% of corresponding authors of submitted papers at Nature. At Nature Communications, scientists from the USA make up 40% of reviewers and 24% of corresponding authors. UK reviewers are slightly overrepresented in both the Nature Research and Wiley titles surveyed, while scientists from countries such as Germany and Japan submit and review manuscripts roughly in proportion.

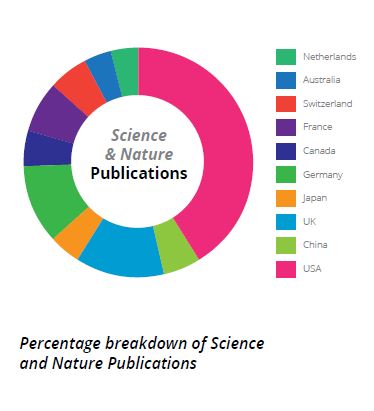

Meanwhile, scientists from countries such as China and Korea are submitting to Nature Research titles, but not reviewing as frequently: Chinese scientists were corresponding authors on 21% of Nature Communications submissions and 11% of Nature submissions, but they made up only 4% and <1% of reviewers, respectively. Although the geographic provenance of submissions may not exactly reflect the geographic distribution of papers sent for review or accepted, contributions from China nonetheless represent 9% of papers published in Science and Nature in 2015 (compared to 30% for the USA), suggesting that competence is not the limitation to finding reviewers from China.
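One way to compare these figures is a simple representation ratio: a group's share of reviewers divided by its share of authors. The sketch below uses only the percentages quoted above. The ratio itself is an illustrative measure rather than one used by the surveys, and the denominators differ between sources (published papers for Wiley, corresponding authors of submissions for the Nature Research titles), so values are best compared within a publisher.

```python
# Percentages quoted in the text, as (share of reviewers, share of authors).
shares = {
    ("USA", "Wiley health"): (33, 22),
    ("USA", "Wiley life sciences"): (34, 24),
    ("USA", "Nature"): (50, 36),
    ("USA", "Nature Communications"): (40, 24),
    ("China", "Nature Communications"): (4, 21),
    # The text reports "<1%" of reviewers; 1 is used here as an upper bound.
    ("China", "Nature"): (1, 11),
}


def representation_ratio(reviewer_share, author_share):
    """Above 1: the group reviews more than its share of papers; below 1: less."""
    return reviewer_share / author_share


ratios = {key: representation_ratio(*value) for key, value in shares.items()}
for (country, venue), ratio in ratios.items():
    print(f"{country:6s} {venue:24s} {ratio:.2f}")
```

A ratio near 1 corresponds to the "roughly in proportion" pattern described for Germany and Japan.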

There is also a noticeable gender bias in reviewer pools. A subset of Wiley journals – those published by the American Geophysical Union (AGU) – found that 20% of reviewers were female, whereas 27% of first authors and 28% of AGU members were female; this disparity persisted over all career stages. The authors of this survey attributed this result in part to a strong gender imbalance in reviewer recommendations. At Nature, where editors may rely less heavily on recommended reviewers, 22% of reviewers across all disciplines were women in 2015; a strong imbalance in the gender of recommended reviewers was also recorded.

The amount of scientific literature published is growing, with one estimate suggesting a growth rate of 8-9% per year. This means that the amount of time spent reviewing is growing too. If peer review is going to be sustainable, editors and publishers will have to find ways to reach new reviewers. Looking at the AGU journal data, one initial solution may lie with authors: asking authors to provide suggestions that reflect varying gender, location and career stage would not only help editors, but also encourage researchers to actively seek out literature that may be missing from their standard citation lists. With the amount of new literature entering the field each year, it can be all too easy for researchers to limit their reading to certain journals or author groups, potentially to the detriment of the base their own work stands on.
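To put the quoted growth rate in perspective, the sketch below converts an annual rate into a doubling time. It assumes steady compound growth, which is a simplification, and the function name is illustrative.

```python
import math


def doubling_time(annual_growth_rate):
    """Years for output to double under constant compound annual growth."""
    return math.log(2) / math.log(1 + annual_growth_rate)


# The 8-9% per year range quoted in the text.
for rate in (0.08, 0.09):
    print(f"{rate:.0%} per year: doubles in about {doubling_time(rate):.1f} years")
```

Under that assumption, published output doubles roughly every eight to nine years, and reviewing time would grow at the same pace if the number of reviews per paper stayed constant.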


Training editors on unconscious bias and setting targets for reviewer diversity across journals and publishers would be another avenue that could be explored with relative ease. But editors are ultimately responsible for what they publish, and they need to be able to know and trust their reviewers’ expertise and broader knowledge of the field. For reviewers with a long list of discoverable publications, this is easy to assess. But for reviewers with a common name that thwarts easy Web of Science searches, or a relatively short publication list, editors may feel they are taking a risk by inviting these individuals to review. Better ways of tracking people who are already reviewing for other journals, such as tying ORCID identifiers to reviews or recording reviews on Publons, could help match editors to experienced referees.

For those who have never reviewed before, a panel at the 2016 SpotOn conference suggested that a reviewer training program could be helpful, especially if it ended in some sort of well-recognized accreditation. Such a program would provide students (or those further along in their careers) with hands-on experience of reviewing combined with feedback from one or more mentors. A database of credentialed individuals could provide an additional avenue for editors to find diverse, competent reviewers.

But opening up reviewing to all scientists is not just about meeting journal targets. Reviewers report that they enjoy feeling that they are playing their part in the academic community, and that they like to see new works and help improve them. Moreover, they feel that reviewing improves their own reputation and standing in the community.


Amongst scientists, peer review is seen as key to instilling confidence in the literature. And despite technological advances in, for instance, automated checks of statistics, few envision a publishing model without pre-publication peer review. But for peer review to remain sustainable in an era of ever-growing scientific output, we must ensure that our base of peer reviewers becomes as global and diverse as the pool of scientists publishing their work.

Acknowledgements:

Many thanks to Elisa De Ranieri for contributing Nature Research data and providing feedback on a draft.
